1. Introduction - The Elusive Network of Digital Contracts

For more than a decade, the technology community has pursued the vision of a global network of self-executing digital contracts. The idea was elegant: agreements written as code, running automatically, enforcing themselves without intermediaries, and creating a new layer of digital trust. It promised to make business transactions as seamless and reliable as digital communication became when the internet was born.

Yet, despite immense effort, that vision has not materialized in a form that could underpin the real economy. The so-called “smart contracts” of public blockchains have proven powerful for experimentation, but fragile, opaque, and detached from the realities of contractual life. The systems built around them have been prone to errors, exploits, and ambiguity - precisely the characteristics that real contracts are designed to eliminate.

Designing a network of digital contracts has turned out to be far more difficult than designing a network of computers. The reason is not technical complexity, but conceptual confusion. Digital contracts do not merely compute - they must represent obligations, rights, and trust between identifiable parties. That requires not just code execution, but verifiable interaction.

Over the years, several misconceptions have guided the development of “smart contract” systems down the wrong path. The most influential of these was the belief that a contract should be Turing-complete - capable of performing any possible computation. This idea, borrowed from computer science, was misplaced in the world of agreements. Real contracts are finite systems; they have clear states, predictable transitions, and definitive outcomes. There is no room for ambiguity, recursion, or indefinite execution.

The Turing-completeness myth is only one of several conceptual errors that have shaped how developers tried to encode trust into software. Others include the assumptions that contracts should be executed by the network, that consensus can produce trust, that immutability equals reliability, and that tokens can stand in for real assets. Each of these beliefs contributed to the detour that delayed the emergence of a true digital contract network.

This article revisits those misconceptions, not to criticize the pioneers of the field, but to learn from them. Each misstep reveals an insight about what digital contracts must truly be: verifiable, finite, and rooted in the accountability of identifiable participants. The network of digital contracts we have been seeking all along is not a computational platform - it is a trust infrastructure.

2. The Original Sin - When Computation Replaced Verification

The first generation of digital contract platforms inherited their design assumptions from computer science rather than from law, finance, or human trust. Their creators faced a fundamental question: how can strangers agree on the outcome of a transaction without trusting one another?

Their answer was elegant but misguided. They replaced verification - the process of checking who said what and whether it was true - with computation performed collectively by an anonymous network. Instead of verifiable statements from identifiable parties, they built a shared, immutable database of results that everyone could agree upon. Trust, they believed, could emerge from consensus over computation.

This approach solved one problem while creating several others. Without verifiable claims, the system had no notion of accountability. Every condition, rule, and dependency had to be embedded directly into executable code. To ensure universal agreement, all logic needed to run identically across every node of the network. That requirement gave rise to the notion of the “Turing-complete smart contract” - a piece of code that could, in theory, perform any conceivable computation.

In practice, this meant that the simplest agreement - a payment, an escrow, a delivery confirmation - had to be modeled as a self-contained computer program. It also meant that the network, not the participants, was responsible for executing and validating the transaction. Every node effectively acted as a global notary, recalculating the same results just to ensure everyone saw the same outcome.

This architecture achieved consistency but at the expense of clarity, scalability, and realism. Real contracts do not depend on everyone in the world recomputing their terms; they depend on the participants being identifiable and accountable for their actions. They require verifiable claims, not shared computation.

The absence of verifiable claims was the original sin that set smart contract design on the wrong trajectory. When you cannot verify who made a statement or whether its content is authentic, you are forced to rely on the network’s consensus as a substitute for trust. That is how digital contracts became programs and why trust became an emergent property of code rather than an attribute of human intention.

The result was a paradox: systems meant to eliminate intermediaries ended up turning the network itself into the ultimate intermediary. Trust was no longer derived from parties and their verifiable commitments but from the collective execution of anonymous nodes.

True digital contracts cannot be built on that foundation. They must return to the principle that trust arises from verifiable interaction - from cryptographically signed claims, issued and accepted by identifiable agents, and validated through a transparent sequence of contractual state transitions. Once that principle is restored, the network no longer needs to compute the outcome of a contract. It only needs to verify that every statement is authentic and that every transition is valid.
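To make the idea of a signed, independently checkable claim concrete, here is a minimal sketch. It uses a Lamport one-time signature built only from SHA-256 so that it runs with the Python standard library. That choice, and the names `Claim`, `issue_claim`, and `verify_claim`, are illustrative assumptions rather than anything this article prescribes. A real system would more likely rely on an established signature scheme from a vetted cryptography library.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Lamport one-time key: 256 pairs of secrets; the public key is their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(sha256(a), sha256(b)) for a, b in private]
    return private, public


def digest_bits(message: bytes) -> list:
    digest = sha256(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private, message: bytes) -> list:
    # Reveal one secret per digest bit. Each key may sign only ONE message.
    return [private[i][bit] for i, bit in enumerate(digest_bits(message))]


def verify(public, message: bytes, signature: list) -> bool:
    if len(signature) != 256:
        return False
    bits = digest_bits(message)
    return all(sha256(sig) == public[i][bits[i]] for i, sig in enumerate(signature))


@dataclass
class Claim:
    issuer: str       # hypothetical agent identifier
    statement: dict   # what the issuer asserts
    signature: list   # proof that the issuer asserted it


def canonical(issuer: str, statement: dict) -> bytes:
    return json.dumps({"issuer": issuer, "statement": statement}, sort_keys=True).encode()


def issue_claim(issuer: str, private, statement: dict) -> Claim:
    return Claim(issuer, statement, sign(private, canonical(issuer, statement)))


def verify_claim(claim: Claim, public) -> bool:
    # Only the issuer's public key is needed; no network has to recompute anything.
    return verify(public, canonical(claim.issuer, claim.statement), claim.signature)


seller_private, seller_public = generate_keypair()
claim = issue_claim("seller", seller_private, {"shipped": "order-17"})
forged = Claim("seller", {"shipped": "order-99"}, claim.signature)

print(verify_claim(claim, seller_public))   # True
print(verify_claim(forged, seller_public))  # False
```

The point of the sketch is the shape of the check: anyone holding the issuer's public key can confirm authorship and integrity alone, which is the property the argument above depends on.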

That is where the real design journey begins - when verification once again replaces computation as the basis of digital trust.

3. Contracts Need to Be Executed by the Network - and Why They Shouldn’t Be

Once computation became the foundation of digital trust, another misconception followed almost inevitably: the idea that contracts must be executed by the network itself. If trust was to emerge from collective computation, then every node had to participate in executing every contract. This became the hallmark of public blockchain architectures - a world computer that would execute anyone’s code for everyone’s benefit.

At first glance, the model appeared elegant. By allowing the network to execute all contract logic, no single participant needed to be trusted. Every result could be confirmed by consensus, and every contract outcome would be equally visible to all. It seemed to offer a kind of mathematical impartiality - trust without trustworthiness.

Yet, this approach ignored the defining property of real contracts: they are executed by the parties who sign them, not by bystanders. A sales agreement is fulfilled by a seller delivering goods and a buyer making payment. An insurance contract is executed by the insurer assessing claims and the insured providing evidence. Each party is both responsible and accountable for its actions. The rest of the world does not need to replay their behavior to verify it.

When a network executes all contracts, the notion of agency disappears. There are no participants, only programs. No responsibilities, only outcomes agreed upon by the crowd. The relationship between identifiable actors - the very essence of a contract - is replaced by an algorithmic mechanism. What was once a legal and social commitment becomes a computational event.

The consequences are significant. Network-based execution is inherently inefficient, as every node repeats the same work. It also enlarges the attack surface: a vulnerability in a single widely used contract, or in the shared execution layer, can affect every participant that depends on it. Most importantly, it severs the link between execution and accountability. When no one directly executes a contract, no one can truly be held responsible for its outcome.

The correct model is far simpler and far closer to how the real world operates. Contracts should be executed by their participants and verifiable by their observers. Each agent performs its role according to the agreed terms and provides cryptographically signed evidence of that performance. The contract’s job is not to perform actions on behalf of its parties but to verify that each participant has done what they committed to do.

This principle restores the natural order of trust. A digital network of contracts does not need to re-execute every transaction globally. It only needs to make each participant’s actions verifiable to those who depend on them. Once every agent’s claims are signed, traceable, and checkable, the need for network-wide execution vanishes.

In such a model, the network becomes what it should have been from the start - not an executor, but a verifier. The system’s integrity arises not from collective computation but from distributed accountability. Each agent is responsible for its own behavior, and the network ensures that responsibility cannot be faked, denied, or obscured.

That shift - from collective execution to individual verification - transforms digital contracts from code into commitments again. It marks the first step toward a truly decentralized trust infrastructure, where agents, not algorithms, are the authors of transactions.

4. Consensus Produces Trust - and Why It Doesn’t

Once the network became the executor of contracts, it also became the source of truth. The logic was simple: if all nodes agree on the outcome, the outcome must be correct. Consensus, in this view, was trust - a mathematical substitute for human judgment.

This belief shaped an entire generation of digital transaction systems. It gave rise to vast, resource-intensive networks where thousands of machines continuously compete to agree on the same facts. These networks succeeded in producing globally consistent ledgers, but they did not produce trust. What they produced was uniformity of record, not authenticity of behavior.

Consensus is a powerful coordination mechanism, but it is not a trust mechanism. It guarantees that everyone agrees on what was recorded, not on whether it was true. If a fraudulent or erroneous statement is submitted to the network and accepted through consensus, it becomes part of the shared history regardless of its accuracy. Agreement, in itself, does not validate truth.

In real-world transactions, trust is never established by counting votes. It is established by verification: checking signatures, confirming data integrity, validating credentials, and ensuring that each action was taken by an authorized party. A bank does not rely on global consensus to confirm a payment; it verifies the authenticity of the payer’s instructions and the sufficiency of their funds. A court does not decide a contract’s validity by popular vote; it verifies evidence and intent.

By treating consensus as the source of trust, early digital contract platforms inverted the relationship between agreement and authenticity. They assumed that if everyone agrees on the data, the data must be authentic. In reality, the sequence should have been the opposite: only once authenticity is verifiable does agreement become meaningful.

This inversion also imposed unnecessary complexity. Achieving consensus among thousands of anonymous nodes requires continuous synchronization, leading to latency, cost, and fragility. Worse, it conflates agreement on facts with agreement on trust. Real trust does not require everyone to agree; it only requires that each participant can verify what matters to them.

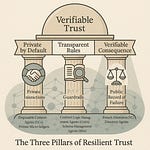

In a verifiable network, trust emerges not from majority consensus but from cryptographic independence. Each participant verifies the authenticity of every claim it relies upon, using signatures, credentials, and proofs. There is no need to ask anyone else for permission to believe what you can verify yourself.

The transition from consensus-based to verification-based trust may seem subtle, but it is foundational. Consensus creates shared belief; verification creates truth that each party can check for itself. The former is collective and fragile, the latter individual and durable.

When verification replaces consensus, digital trust stops being a social agreement among machines and becomes an intrinsic property of the data itself. Every signed claim carries its own proof of authenticity, and every transaction can be validated independently by any related party. That is how digital networks become self-verifying - not because everyone agrees, but because no one has to.

Only when that distinction is understood can digital contracts evolve beyond the illusion of computational trust toward the reality of verifiable trust.

5. Tokens Represent Assets - and Why They Don’t

When the network became both the executor of contracts and the source of trust, a new abstraction quickly followed: the token.

Tokens were introduced as the digital equivalent of assets - a way to represent value, rights, or ownership within a system where trust had to be computed rather than verified.

If consensus could determine truth, then perhaps it could also determine possession.

In principle, the idea seemed sound. A token could symbolize anything: money, property, equity, or access. Whoever held the token owned the right it represented. Transfers could be programmed, ownership could be tracked, and scarcity could be guaranteed by the network itself. It was a seductive promise - assets without issuers, transactions without intermediaries, value without context.

Yet, this too was a misconception.

Tokens can represent records, but they cannot represent relationships. Real assets - deposits, loans, invoices, shares - are defined not by the mere existence of an entry, but by the contract that governs them. They are the product of legal, financial, and institutional relationships among identifiable parties. A token may encode transferability, but it cannot encode accountability.

Ownership, in the real world, is a claim backed by evidence and law. It is always conditional - on payment, delivery, performance, or compliance. Tokens, by contrast, are unconditional by design. Once transferred, their meaning depends solely on the network’s collective record, not on any verifiable agreement among parties. That abstraction severs the link between digital representation and real-world rights.

The problem deepens when tokens attempt to model regulated financial instruments. A share of stock, for instance, is not just a transferable item. It conveys voting rights, dividends, disclosure obligations, and jurisdictional constraints. All of these are contractual commitments among multiple parties. Turning them into tokens strips away their contractual substance, leaving only a mechanical residue - a record of who holds what, divorced from why.

In the absence of verifiable claims, tokenization became a substitute for context. The network treated possession of a token as proof of entitlement because it could not verify the underlying contract.

It was a workaround for missing trust primitives.

The correct foundation for digital value is not tokenization but verifiable contractual claims.

An asset should exist because an accountable issuer - a bank, a company, or an individual - has signed a verifiable statement defining its terms. Its ownership should change when that issuer or another authorized agent issues a new verifiable claim transferring rights to a new holder.

In that model, every unit of value has provenance, purpose, and proof - not just a record in a ledger.
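As a rough illustration of an asset defined by claims rather than by a token balance, the sketch below models an asset as a chain of claims: one issuance claim from an accountable issuer, followed by transfer claims that each reference the digest of the claim before them. The names `AssetClaim` and `current_holder` are hypothetical, the rule that a transfer must come from the current holder or the issuer is an assumption made for this example, and real signatures are deliberately left out (the `signer` field stands in for a verified signature).

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class AssetClaim:
    kind: str                # "issue" or "transfer"
    signer: str              # agent asserting the claim (signature check elided here)
    holder: str              # who holds the rights after this claim
    terms: Optional[str]     # contractual terms, stated at issuance
    previous: Optional[str]  # digest of the prior claim, None for issuance

    def digest(self) -> str:
        body = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(body).hexdigest()


def current_holder(chain, issuer: str) -> str:
    """Walk the provenance chain; raise ValueError if any link is invalid."""
    if not chain:
        raise ValueError("empty chain")
    first = chain[0]
    if first.kind != "issue" or first.signer != issuer or first.previous is not None:
        raise ValueError("asset must start with an issuance claim from its issuer")
    holder, previous = first.holder, first.digest()
    for claim in chain[1:]:
        if claim.kind != "transfer" or claim.previous != previous:
            raise ValueError("claim does not link to the previous one")
        if claim.signer not in (holder, issuer):
            raise ValueError("transfer not authorized by the holder or the issuer")
        holder, previous = claim.holder, claim.digest()
    return holder


issuance = AssetClaim("issue", "acme-bank", "alice", "deposit of 100 EUR", None)
transfer = AssetClaim("transfer", "alice", "bob", None, issuance.digest())
print(current_holder([issuance, transfer], "acme-bank"))  # bob

theft = AssetClaim("transfer", "mallory", "mallory", None, issuance.digest())
try:
    current_holder([issuance, theft], "acme-bank")
except ValueError as error:
    print("rejected:", error)
```

Unlike a bare ledger entry, every step here names who asserted it and what it was derived from, so the holder's entitlement can be traced back to the issuer's terms.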

Tokens made assets programmable, but they also made them abstract.

Verifiable claims make assets accountable.

They restore the essential link between who, what, and why - the triad that defines real value.

A network of digital contracts cannot rely on tokens to express ownership or trust. It must rely on signed obligations and verifiable rights.

Only then can digital assets behave like their real-world counterparts: as parts of enforceable relationships, not as self-referential entries in a shared database.

6. Immutability Equals Trust - and Why It Doesn’t

When networks began to struggle with the absence of accountability, a new principle was introduced to compensate: immutability.

If the content of the ledger could not be altered, it was reasoned, then its integrity could be trusted. In this model, trust was no longer about who made a statement, but about the certainty that no one could ever change it afterward.

This belief gave rise to one of the most persistent myths in digital transaction design: that immutability guarantees trust.

It doesn’t. It guarantees only permanence.

Immutability protects data from being rewritten, but it does not make the data true, fair, or lawful. A false statement, once immutably recorded, remains false forever. An error, once accepted, becomes permanent. In real-world transactions, the ability to correct mistakes, amend terms, and adapt to new circumstances is not a weakness - it is a requirement for justice and functionality.

Contracts are living instruments. They evolve through negotiation, amendment, renewal, and sometimes reversal. Every meaningful business relationship depends on this ability to change with mutual consent. The notion that code or data should be immutable misunderstands the nature of trust: trust depends on transparency of change, not on prohibition of change.

The proper objective is not immutability but accountable mutability - a system where every modification is verifiable, every revision is signed, and every previous state remains accessible for audit. What matters is that no change can occur without the explicit authorization of those entitled to approve it, and that the evidence of that authorization can be independently verified.

In such a framework, history is not frozen but versioned. Each transition becomes an additional verifiable claim, linking past and present states in a continuous chain of accountability. Trust arises from the assurance that every change is legitimate and traceable, not from the impossibility of change itself.
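One way to picture accountable mutability is an append-only revision history in which terms may change, but only with the approval of every party, and in which each revision is linked to the digest of the one before it. The sketch below is an assumption-laden illustration: the class name `VersionedContract` is hypothetical, approvals are plain names standing in for verified signatures, and unanimous approval is just one possible authorization rule.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class VersionedContract:
    """Terms can change, but every change is approved, linked, and auditable."""

    def __init__(self, parties, terms):
        self.parties = frozenset(parties)
        self.history = []
        self._append(terms, self.parties)

    def _append(self, terms, approvals):
        previous = self.history[-1]["digest"] if self.history else None
        body = {
            "version": len(self.history) + 1,
            "terms": terms,
            "approved_by": sorted(approvals),
            "previous": previous,
        }
        self.history.append({**body, "digest": _digest(body)})

    def amend(self, new_terms, approvals):
        missing = self.parties - set(approvals)
        if missing:
            raise PermissionError(f"missing approval from: {sorted(missing)}")
        self._append(new_terms, approvals)

    def audit(self) -> bool:
        """Recompute every digest and link; True only if the history is intact."""
        previous = None
        for entry in self.history:
            body = {k: v for k, v in entry.items() if k != "digest"}
            if entry["previous"] != previous or entry["digest"] != _digest(body):
                return False
            previous = entry["digest"]
        return True


contract = VersionedContract({"buyer", "seller"}, {"price": 100})
contract.amend({"price": 90}, approvals={"buyer", "seller"})

try:
    contract.amend({"price": 1}, approvals={"buyer"})  # seller never agreed
except PermissionError as error:
    print("rejected:", error)

print(len(contract.history), contract.audit())  # 2 True

contract.history[0]["terms"] = {"price": 5}  # silent rewrite of the past
print(contract.audit())                      # False
```

The earlier version stays available for audit, the unauthorized amendment never enters the history, and a silent rewrite is detectable: change is allowed, but not without proof.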

The pursuit of immutability also revealed a deeper misunderstanding: a confusion between data integrity and trust integrity. Data integrity ensures that information has not been altered. Trust integrity ensures that the right people have made the right decisions according to agreed rules. The former can be achieved by cryptography; the latter requires governance - or, in the case of digital contracts, verifiable authorization among identifiable agents.

When a system prioritizes immutability over accountability, it locks errors and injustices into its core. When it prioritizes accountable mutability, it allows evolution without losing verifiability. The difference is not technical but philosophical. It marks the shift from “nothing can change” to “nothing can change without proof.”

Trust does not live in permanence; it lives in the integrity of change. A network of digital contracts must, therefore, preserve the freedom to amend, correct, and adapt, while ensuring that every such act leaves an indelible, verifiable trace.

That is the essence of a trustworthy system: not immutability, but accountability - a living record of authorized transformation.

7. Anonymity Ensures Fairness - and Why It Doesn’t

When public trust in centralized intermediaries began to erode, a powerful idea took hold: that removing identity from the equation would make digital systems more fair. If no one could be distinguished from anyone else, then no one could be privileged or discriminated against. Fairness, it was believed, would emerge from anonymity.

This notion aligned perfectly with the ethos of early decentralized systems. Transactions between pseudonymous addresses seemed to embody equality - every participant interacting under the same conditions, every action visible to all, and no authority capable of favoring one over another. In that sense, anonymity appeared to democratize trust.

Yet, anonymity also removes the very element that makes trust possible: responsibility.

When no one is identifiable, no one can be held accountable. Agreements lose their enforceability, obligations lose their meaning, and intent becomes unverifiable. What remains is a network of transactions without actors - a mechanical system of value transfer detached from human or institutional integrity.

In real economies, fairness does not come from uniform ignorance of identity. It comes from verifiable accountability combined with controlled privacy. Knowing who you are dealing with does not create bias; it creates responsibility. What must be protected is not anonymity, but the right to disclose only what is necessary to complete a transaction.

Digital trust networks should therefore be built on selective verifiability rather than anonymity. Each participant should have a verifiable identity - a digital counterpart to their legal or organizational self - yet retain control over how and when that identity is revealed. Through mechanisms like verifiable credentials, a participant can prove specific facts (“I am licensed,” “I am authorized,” “I am solvent”) without exposing everything about themselves.
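The following sketch shows one simple way such controlled disclosure could work, using salted hash commitments: the issuer publishes only a set of digests, and the holder later reveals a single attribute together with its salt. The function names are hypothetical, and the issuer's signature over the commitment set is assumed and omitted. Production credential formats are considerably more elaborate than this.

```python
import hashlib
import json
import secrets


def commit(name: str, value: str, salt: bytes) -> str:
    """Digest that binds an attribute to its value without revealing either."""
    payload = json.dumps([name, value]).encode()
    return hashlib.sha256(salt + payload).hexdigest()


def issue_credential(attributes: dict):
    """Issuer side: return (commitments to publish, openings kept by the holder)."""
    openings = {
        name: (value, secrets.token_bytes(16)) for name, value in attributes.items()
    }
    commitments = {commit(n, v, s) for n, (v, s) in openings.items()}
    # Assumption: the issuer would also sign `commitments`; omitted in this sketch.
    return commitments, openings


def disclose(openings: dict, name: str) -> dict:
    """Holder side: reveal exactly one attribute and nothing else."""
    value, salt = openings[name]
    return {"name": name, "value": value, "salt": salt}


def verify_disclosure(commitments: set, disclosure: dict) -> bool:
    """Verifier side: check the revealed attribute against the commitments."""
    digest = commit(disclosure["name"], disclosure["value"], disclosure["salt"])
    return digest in commitments


commitments, openings = issue_credential(
    {"licensed": "yes", "legal_name": "Alice Example", "solvent": "yes"}
)

proof = disclose(openings, "licensed")
print(verify_disclosure(commitments, proof))     # True

tampered = {**proof, "value": "no"}
print(verify_disclosure(commitments, tampered))  # False
```

The verifier learns that the participant is licensed and can check that the issuer committed to that fact, while the legal name and solvency status stay undisclosed.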

Such controlled disclosure restores fairness through symmetry: each participant is equally empowered to protect their privacy while still being verifiably accountable for their actions. No one is forced to trust blindly, and no one can act without leaving a verifiable trace of authorship.

Anonymity, by contrast, erodes fairness over time. It invites abuse, because unidentifiable actors face no consequences for deception or manipulation. It also undermines trust, since honest participants have no way to distinguish integrity from fraud. Systems built on anonymity often end up reintroducing centralized control mechanisms to mitigate the resulting risks - a paradox that defeats the very principle they were designed to uphold.

Fairness and trust are not products of obscurity; they are products of symmetry and verification.

In a network of verifiable agents, each participant stands on equal footing - identifiable, accountable, and in control of their own disclosures. That balance is what enables both privacy and integrity to coexist.

The future of digital trust will not be anonymous. It will be verifiably private. Each transaction will preserve dignity and accountability in equal measure - proving not that participants are hidden, but that their actions are honest.

8. Smart Contracts Are General-Purpose Programs - and Why They Shouldn’t Be

Perhaps the most persistent misunderstanding in the evolution of digital contract systems is the belief that contracts should behave like general-purpose software. From that belief came the idea that a contract must be Turing-complete - capable of performing any computation that can be described in code. It was an idea borrowed from computer science but misplaced in the world of trust and agreements.

In computer science, Turing-completeness is a measure of expressiveness: a language is Turing-complete if it can express any computation that a Turing machine can perform. But contracts are not algorithms; they are structured commitments between identifiable parties. A contract does not need unlimited expressive power - it needs clarity, determinism, and verifiability.

In real life, every contract can be modeled as a finite state machine. It begins in a defined state, transitions through a predictable sequence of conditions, and ends when its obligations are fulfilled or terminated. There is no valid “undefined” state, and there are no infinite loops. The contract must always be in one and only one state, known to all participants. That finiteness is what makes it enforceable.

When smart contract platforms made their languages Turing-complete, they replaced this natural constraint with computational flexibility. In doing so, they introduced uncertainty. Code could loop indefinitely, depend on external inputs, or produce unpredictable results. Because no general procedure can decide in advance whether an arbitrary program will terminate, platforms had to impose execution limits, gas fees, and complex runtime guards - all symptoms of an underlying mismatch between computation and commitment.

The consequences were more than technical. The focus shifted from verifying obligations to executing logic. Developers wrote code to simulate human intent, instead of representing it explicitly. Contracts became programs, and the role of participants was reduced to triggering or observing machine behavior. This transformation distanced digital contracts from the structure of real agreements and made them opaque to those who were supposed to trust them.

A better model is not one of computation but of verification through declaration.

A digital contract should declare its states, the conditions for transition, and the required verifiable claims for each step. Execution then becomes a matter of validation: confirming that the required signatures, proofs, and data have been provided. Once all conditions are satisfied, the contract issues its own verifiable outcome.

Such a contract might look like this in conceptual form:

Contract: PurchaseAgreement

States: [Proposed, Confirmed, Paid, Delivered, Completed]

Transitions:

- Proposed → Confirmed when buyer_signed && seller_signed

- Confirmed → Paid when payment_received

- Paid → Delivered when shipment_proof_verified

- Delivered → Completed when buyer_acknowledged

This model is finite, deterministic, and auditable. It mirrors the way legal agreements operate in the real world - clear, bounded, and subject to mutual understanding. It also enables composability: each state transition can be handled by a specialized sub-contract or service, while the main contract remains verifiable and simple.
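The conceptual PurchaseAgreement above can be sketched as a small state machine. In this illustration the state names and conditions come from the listing, while the `TRANSITIONS` table, the `submit` method, and the rule that a claim is accepted only in the state that requires it are assumptions of the example. Each claim string stands for a claim whose signature has already been verified.

```python
from dataclasses import dataclass, field

# state -> (next state, claims required before the transition may happen)
TRANSITIONS = {
    "Proposed": ("Confirmed", {"buyer_signed", "seller_signed"}),
    "Confirmed": ("Paid", {"payment_received"}),
    "Paid": ("Delivered", {"shipment_proof_verified"}),
    "Delivered": ("Completed", {"buyer_acknowledged"}),
}


@dataclass
class PurchaseAgreement:
    state: str = "Proposed"
    claims: set = field(default_factory=set)
    log: list = field(default_factory=list)

    def submit(self, claim: str) -> str:
        """Record one verified claim; advance only when all conditions are met."""
        if self.state not in TRANSITIONS:
            raise ValueError("contract is already in its final state")
        target, required = TRANSITIONS[self.state]
        if claim not in required:
            raise ValueError(f"{claim!r} is not accepted in state {self.state}")
        self.claims.add(claim)
        if required <= self.claims:
            self.log.append((self.state, target))  # auditable transition record
            self.state = target
        return self.state


agreement = PurchaseAgreement()
for claim in [
    "buyer_signed",
    "seller_signed",
    "payment_received",
    "shipment_proof_verified",
    "buyer_acknowledged",
]:
    agreement.submit(claim)

print(agreement.state)     # Completed
print(len(agreement.log))  # 4
```

Nothing in this sketch performs the purchase itself. The contract only checks that the required claims have arrived and records which transition they justified, which keeps it in exactly one known state at all times.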

The goal of digital contracts should never have been computational universality. It should have been verifiable completeness - the guarantee that every possible path through the contract leads to a clearly defined and final outcome.
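Because a declared contract is a finite graph of states, a property like this can be checked mechanically before anyone signs. The sketch below is one possible interpretation, not a definition from this article: it treats a contract as verifiably complete when every path from the initial state is finite and ends in a declared final state. The function name `every_path_terminates` and the example graphs are hypothetical.

```python
def every_path_terminates(graph: dict, initial: str, finals: set) -> bool:
    """True if all paths from `initial` are finite and end in a state in `finals`."""
    IN_PROGRESS, DONE = 1, 2
    status = {}

    def visit(state: str) -> bool:
        if status.get(state) == DONE:
            return True
        if status.get(state) == IN_PROGRESS:
            return False  # cycle: some path could run forever
        status[state] = IN_PROGRESS
        successors = graph.get(state, [])
        if not successors and state not in finals:
            return False  # dead end that is not a defined outcome
        ok = all(visit(nxt) for nxt in successors)
        status[state] = DONE
        return ok

    return visit(initial)


purchase = {
    "Proposed": ["Confirmed"],
    "Confirmed": ["Paid"],
    "Paid": ["Delivered"],
    "Delivered": ["Completed"],
}
print(every_path_terminates(purchase, "Proposed", {"Completed"}))  # True

# A dispute branch that loops back without limit breaks the guarantee.
looping = {**purchase, "Paid": ["Delivered", "Disputed"], "Disputed": ["Paid"]}
print(every_path_terminates(looping, "Proposed", {"Completed"}))   # False

# So does a branch that ends in a state nobody declared as an outcome.
dangling = {**purchase, "Paid": ["Delivered", "Disputed"]}
print(every_path_terminates(dangling, "Proposed", {"Completed"}))  # False

# Declaring the extra outcome as final restores completeness.
print(every_path_terminates(dangling, "Proposed", {"Completed", "Disputed"}))  # True
```

No comparable check exists for arbitrary programs in a Turing-complete language, which is the practical cost of computational universality in this setting.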

Turing-completeness makes systems more powerful but less trustworthy. Finite verifiability makes them less flexible but far more reliable. The choice between the two determines whether digital contracts become the next layer of the internet or remain an academic curiosity.

Real contracts are not programs; they are finite commitments between identifiable agents. Once that truth is reflected in their digital form, the complexity of smart contracts dissolves, and the clarity of verifiable interaction takes its place.

9. Data Must Be Shared to Be Trusted - and Why It Needn’t Be

From the beginning, digital ledger systems were built on a simple intuition: if everyone can see and confirm the same data, then everyone can trust it. The model promised transparency, equality of information, and the elimination of hidden manipulation. Sharing data was equated with creating trust.

That assumption gave rise to the idea of a public ledger - a globally visible record of transactions, replicated across thousands of nodes. Each node could verify that its copy matched the others, ensuring that no single party could secretly alter history. The symmetry of access was supposed to replace the asymmetry of power.

Yet again, the premise was misplaced. Trust does not arise from everyone seeing the same data; it arises from everyone being able to verify that the data they do see is authentic and relevant.

Replication is not verification.

In the physical world, no one demands that every transaction be broadcast to everyone else to be trusted. When two parties sign a contract, they do not publish it to the public for validation. They rely on their ability to prove its authenticity if required. Trust is built through verifiable relationships, not universal visibility.

By confusing transparency with trust, early ledger systems created architectures that sacrificed privacy, confidentiality, and scalability. Every transaction had to be made public, and every full node had to carry a complete copy of global history. Such systems could never reconcile the principles of privacy, regulation, and commercial discretion that real economies require.

The correct foundation for digital trust is verifiable data, not shared data. Information does not need to be public to be trustworthy; it only needs to be signed, timestamped, and cryptographically verifiable by those entitled to see it.

In a network of verifiable agents, each participant maintains its own micro-ledger - a private record of its transactions and states. Every entry in that ledger is supported by verifiable claims issued by counterparties. No third party needs to hold or confirm the same data, because authenticity is embedded in the data itself. Each agent can independently verify any statement it relies on, without consulting a central authority or a consensus network.

This model preserves privacy while ensuring trust. It also aligns with how real institutions operate. Banks, insurers, and companies do not rely on shared databases to conduct business; they rely on verifiable exchanges - messages, signatures, confirmations - that bind participants to their commitments. A verifiable network restores this natural order in digital form.

Transparency can still exist, but it becomes a matter of choice, not architecture. Participants can expose proofs, summaries, or credentials when required, without revealing their entire histories.

What replaces “shared truth” is individually verifiable truth - a much stronger foundation for privacy-preserving trust.

When data becomes verifiable rather than merely shared, the meaning of trust changes. It is no longer about believing what others believe, but about being able to confirm what you depend on.

This marks the end of the shared-ledger paradigm and the beginning of a more human one - a network where trust travels with the data itself, not with its replication.

The future of digital trust will not be transparent by exposure but transparent by proof.

10. The Way Forward - Networks of Verifiable Agents

After more than a decade of experimentation, one lesson has become clear: digital trust cannot be programmed into networks through computation, consensus, or immutability. It must emerge from verifiable interaction between identifiable agents.

Every misconception explored earlier - that contracts must be executed by the network, that consensus produces trust, that tokens represent assets, or that data must be shared to be believed - stemmed from the same underlying omission. The early systems lacked a concept of agents capable of making verifiable claims, bearing responsibility for their actions, and interacting under well-defined contracts.

The way forward begins by restoring that concept.

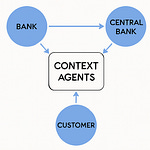

A verifiable agent is an identifiable digital entity - human, organizational, or autonomous - equipped with cryptographic keys that bind its identity to a secure root of trust. Each agent can issue, sign, and verify claims. When two or more agents interact, they do so through a digital contract that defines their obligations and outcomes as finite, verifiable states. The contract itself is an agent - a context of interaction that validates inputs and issues outputs in the form of new verifiable claims.

Together, these agents form a verifiable network - a system in which trust arises naturally from the authenticity of statements, not from the architecture of the network.

Every transaction, agreement, and event becomes a cryptographically verifiable interaction. Each participant maintains its own micro-ledger, recording the state of its relationships. There is no need for global consensus, centralized oversight, or public replication of data. The network’s integrity is guaranteed by the autonomy and accountability of its members.

This architecture also offers something more profound: interoperability of trust.

Because every claim carries its own proof, different domains, industries, or institutions can interact without sharing infrastructure or compromising privacy. A bank can settle with another bank, a business can trade with a supplier, or a regulator can audit compliance - all through verifiable claims exchanged among agents.

Such a system finally allows digital transactions to reach the same level of reliability that digital communication achieved decades ago. Just as the internet connected computers, a verifiable agent network connects commitments. It becomes an Internet Trust Layer - a universal layer for verifiable interaction across the digital economy.

Interestingly, this same foundation is now becoming essential for a very different field: Agentic Artificial Intelligence.

As AI systems gain autonomy and begin to act as independent agents, they too will need a network where identity, authorship, and accountability are verifiable. A self-directed AI agent must be able to sign its statements, prove its authority, and interact with human or organizational agents under contractually defined rules. Without such a trust layer, autonomous AI will remain ungrounded - capable of reasoning, but not of responsibility.

The architecture that enables verifiable digital contracts among humans is therefore the same one that will anchor trustworthy AI interactions in the future. The principle is universal: trust arises not from control or consensus, but from verifiable behavior among identifiable agents.

The path ahead is not about replacing institutions or intermediaries, but about giving every participant - human or digital - the ability to act independently and verifiably. That shift transforms the digital world from a collection of applications into an ecosystem of accountable agents.

This is how the long pursuit of digital trust finds its destination.

Not in a global computer, not in immutable ledgers, and not in anonymous consensus, but in a verifiable web of identities, claims, and contracts.

A network of agents, capable of proving what they do and why - the true foundation of the next digital transformation.

11. Conclusion - From Misconception to Maturity

The pursuit of digital contracts began with immense ambition. It sought to make trust programmable - to capture the logic of agreements, automate their execution, and remove the need for intermediaries. The goal was never misplaced. What went wrong was the path chosen to reach it.

For years, the field followed the logic of computers instead of the logic of commitments. It assumed that trust could be derived from computation, consensus, and code. Each step away from human accountability - anonymous execution, immutable data, global consensus, tokenized value - was a step away from how trust has always functioned in the real world.

And yet, every misconception taught something essential. By trying to compute trust, we discovered the limits of computation. By enforcing immutability, we learned the necessity of accountable change. By pursuing anonymity, we rediscovered the value of verifiable identity. Each wrong turn revealed what trust in the digital world must truly depend on: verification, not belief.

The architecture that finally resolves these contradictions is conceptually simple. It does not rely on collective computation or shared ledgers. It is built from agents - identifiable, autonomous entities capable of issuing and verifying cryptographically signed claims. Every contract is a finite verifiable process that defines the conditions under which claims are exchanged and validated. Every participant maintains its own verifiable history of actions - a private but auditable ledger of accountability.

Together, these agents form an Internet Trust Layer, a network where transactions are as verifiable as they are private, and where trust arises from the authenticity of interactions, not from the authority of a system. It is a return to the natural logic of the economy: relationships built on identity, intent, and verifiable performance.

The same foundation will also become essential for Agentic AI, as autonomous systems begin to act, transact, and negotiate on behalf of humans and organizations. They too must be part of a verifiable network, one that records what they do, why they do it, and under whose authority. Only then can autonomy coexist with accountability.

The digital contract revolution was delayed, not denied. The early detours were necessary; they clarified what does not work. Now that the misconceptions have been understood, the path ahead is clear. Trust in the digital age will not be achieved by encoding it into global ledgers or embedding it into programs. It will be achieved by enabling verifiable interaction among accountable agents.

The future of the digital economy - and of AI - depends on this principle.

When verification replaces belief, and when every action carries proof of authorship and intent, digital transactions can finally be as dependable as the real-world agreements they represent.

That is the moment when digital contracts cease to be a promise and become an infrastructure, and when the network of trust we have been imagining for decades finally becomes real.